DEFINITION OF 16-BIT, 32-BIT, 64-BIT, AND 128-BIT

Ten years ago, 16-bit applications began giving way to 32-bit applications. Now it is time for 32-bit to step aside in favor of 64-bit. The arrival of a 64-bit desktop version of Windows, Windows XP Professional x64 Edition, further confirms that it is time to start switching to 64-bit. Some argue that Windows XP Professional x64 is not a revolutionary product but the result of an evolution, of the kind that has happened before. And indeed the time has come, considering how long 64-bit processors have been available on the market.

What Is 64-bit?

If you are still wondering what 64-bit means, read the following explanation; if not, you can skip to the next section. For a processor, the number of bits describes the width of the data (the word size, i.e. the size of its general-purpose registers) that it can process directly in a single operation. A 32-bit CPU works on 32-bit values in one step, and a 64-bit CPU works on 64-bit values in one step. The results computed by the CPU are then written back to memory. Because a wider word lets the CPU handle more data per operation and form larger memory addresses, it can also indirectly improve memory performance.
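To make "register width" concrete, here is a minimal toy sketch, assuming plain unsigned integer arithmetic; the function names are hypothetical and chosen for illustration only. It shows that a value which overflows a 32-bit register fits comfortably in a 64-bit one.

```python
# Toy model of a fixed-width CPU register: every result is truncated
# ("wrapped") to the register width, as integer hardware does.


def register_mask(bits: int) -> int:
    """Largest unsigned value a register of `bits` width can hold."""
    return (1 << bits) - 1


def add_in_register(a: int, b: int, bits: int) -> int:
    """Add two unsigned values and keep only the low `bits` bits."""
    return (a + b) & register_mask(bits)


if __name__ == "__main__":
    big = 0xFFFF_FFFF  # largest 32-bit unsigned value
    print(hex(register_mask(16)))             # 0xffff
    print(hex(add_in_register(big, 1, 32)))   # 0x0 (overflow wraps around)
    print(hex(add_in_register(big, 1, 64)))   # 0x100000000 (fits in 64 bits)
```

Real processors also report the overflow through a carry flag; the sketch only models the truncated result.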

64-bit Processors in the x86 Processor Architecture

The x86 architecture is the basic design for desktop processors from Intel (and Intel-compatible vendors), from the 8088 up to the era of the Intel Pentium 4 and Intel Pentium D, and for AMD up to the Athlon class. AMD was the first to propose a 64-bit design for the x86 architecture. Its 64-bit extension was initially called x86-64, but in 2003 AMD renamed it AMD64. AMD began publishing the technical reference documentation in August 2000, so that software developers could adapt and optimize their code for the AMD64 instruction set. Intel EM64T is based on the AMD64 architecture. The main differences between the two are features that only Intel processors had, such as Hyper-Threading (HT) technology and the SSE3 instructions. IA64, on the other hand, is the term Intel uses for the architecture of the Intel Itanium and Itanium 2 processors. Unlike AMD64 and Intel EM64T, which are built on the x86 architecture, IA64 has only limited compatibility with x86.
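The family split above is visible in the machine name an OS reports. The following is a small sketch, assuming the strings commonly returned by Python's `platform.machine()` (for example `AMD64` on 64-bit Windows, `x86_64` on Linux); the lookup table and `arch_family` helper are hypothetical. Note that Intel EM64T machines report the same `AMD64`/`x86_64` string, which reflects that EM64T implements the AMD64 instruction set.

```python
import platform

# Hypothetical lookup table: common platform.machine() strings grouped
# into the architecture families described above.
ARCH_FAMILIES = {
    "amd64": "x86-64 (AMD64 / Intel EM64T)",
    "x86_64": "x86-64 (AMD64 / Intel EM64T)",
    "ia64": "IA-64 (Itanium)",
    "x86": "32-bit x86",
    "i386": "32-bit x86",
    "i686": "32-bit x86",
}


def arch_family(machine: str) -> str:
    """Map a machine string such as 'AMD64' or 'x86_64' to its family."""
    return ARCH_FAMILIES.get(machine.lower(), "other / unknown")


if __name__ == "__main__":
    name = platform.machine()
    print(name, "->", arch_family(name))
```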

So, What Is 128-bit RAM?

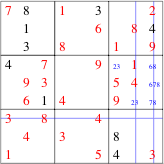

A 32-bit processor already works with 64-bit memory modules. This refers to the width of the data bus between the processor and RAM: each memory channel transfers 64 bits at a time. Some of the newest systems, with support from the motherboard's northbridge chipset, let the processor and RAM work over a wider data bus by using a dual-channel design, in which two 64-bit channels operate side by side. Manufacturers often call this 128-bit, although the name is not entirely accurate.

What Is the Maximum Amount of RAM That Can Be Used in 64-bit Windows?

The maximum amount of RAM a system can use depends on three things: the processor, the motherboard, and the operating system. Most motherboards with the latest chipsets support up to 4 GB, while most applications running on a 32-bit Windows operating system can access at most 4 GB of memory. This applies specifically to Windows XP.
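Both figures in this section come from simple arithmetic. The sketch below assumes DDR-400 memory (400 million transfers per second) purely as an example; the helper names are hypothetical. It shows why two 64-bit channels get marketed as "128-bit" (double the bytes per transfer), and why a 32-bit address tops out at 4 GB.

```python
# Back-of-the-envelope arithmetic for the two figures in this section.


def peak_bandwidth_bytes_per_s(bus_width_bits: int, mega_transfers: int) -> int:
    """Theoretical peak = bytes per transfer x transfers per second."""
    return (bus_width_bits // 8) * mega_transfers * 1_000_000


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses an address of this width can name."""
    return 1 << address_bits


if __name__ == "__main__":
    # Assumed example: DDR-400, i.e. 400 million transfers per second.
    single = peak_bandwidth_bytes_per_s(64, 400)   # one 64-bit channel
    dual = peak_bandwidth_bytes_per_s(128, 400)    # two channels = "128-bit"
    print(single / 1e9, dual / 1e9)                # 3.2 6.4 (GB/s)
    print(addressable_bytes(32) / 2**30)           # 4.0 (GB, binary)
```

These are theoretical peaks; real-world throughput is lower.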

Most PC users probably do not yet use the full 4 GB of RAM. However, the 4 GB ceiling is becoming a real limitation for PC workstations, for example in CAD/CAM design, image manipulation, and high-end video work, and of course in games, which will also take advantage of more memory. Windows XP Professional x64 Edition raises the maximum to 128 GB of RAM and can handle up to 16 TB (terabytes) of virtual memory.

In the 64-bit Era, What Happens to Applications That Use x87 FP, MMX, 3DNow!, and SSE Instructions?

x87 is the floating-point extension to the x86 architecture, originally a chip separate from the processor. Some know it as the math coprocessor; examples include the 8087, 80287, 80387, and 487SX. Starting with the Intel 486DX, Pentium, and later processors, the x87 unit is built into the processor. Its main task is high-precision calculation, as in CAD (computer-aided design) and spreadsheet applications. However, x87 is now considered inefficient, so on the AMD64 architecture its role is largely taken over by a so-called "flat register file" with 16 entries (the 16 SSE registers).
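The difference between the x87 register stack and a flat register file is mostly about how operands are named. The toy model below is a hypothetical illustration, not real x87 or SSE2 semantics: the stack version can only work on whatever sits at the top (and holds at most 8 values, like ST0 to ST7), while the flat version names any of 16 registers directly.

```python
# Toy model: contrasts how operands are named on a register stack (x87 style)
# and in a flat register file (SSE2 style). Not real x87/SSE2 semantics.


class StackFPU:
    """x87-style: up to 8 values; arithmetic consumes the top of the stack."""

    def __init__(self):
        self.stack = []

    def push(self, value):
        if len(self.stack) == 8:
            raise OverflowError("the x87-style stack holds only 8 values")
        self.stack.append(value)

    def _pop_two(self):
        top = self.stack.pop()
        below = self.stack.pop()
        return below, top

    def mul(self):
        """Pop two operands, push their product."""
        a, b = self._pop_two()
        self.stack.append(a * b)

    def add(self):
        """Pop two operands, push their sum."""
        a, b = self._pop_two()
        self.stack.append(a + b)


class FlatFPU:
    """SSE2-style: 16 registers, each named directly by its index."""

    def __init__(self):
        self.xmm = [0.0] * 16

    def mul(self, dst, src):
        self.xmm[dst] *= self.xmm[src]   # xmm[dst] = xmm[dst] * xmm[src]

    def add(self, dst, src):
        self.xmm[dst] += self.xmm[src]   # xmm[dst] = xmm[dst] + xmm[src]


def mul_add_stack(a, b, c):
    """Compute a * b + c by pushing and popping."""
    fpu = StackFPU()
    fpu.push(a)
    fpu.push(b)
    fpu.mul()          # stack: [a*b]
    fpu.push(c)
    fpu.add()          # stack: [a*b + c]
    return fpu.stack[-1]


def mul_add_flat(a, b, c):
    """Compute a * b + c by naming registers explicitly."""
    fpu = FlatFPU()
    fpu.xmm[0], fpu.xmm[1], fpu.xmm[2] = a, b, c
    fpu.mul(0, 1)      # xmm0 = a * b
    fpu.add(0, 2)      # xmm0 = a * b + c
    return fpu.xmm[0]
```

With more values in flight, the stack version has to shuffle entries to reach them, while the flat version simply uses another register index.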

AMD64 keeps the SSE2 (Streaming SIMD Extensions 2) instructions. SSE2 can be used for calculations in both 32-bit and 64-bit applications, and it is faster than relying on x87. SSE2 also covers the functionality of the MMX and 3DNow! instructions. This lets AMD64 and Intel EM64T processors work more efficiently, for 32-bit and 64-bit applications alike.
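SIMD means one instruction works on several values packed into a single 128-bit register. The sketch below is a toy model that represents a register as 16 raw bytes using Python's `struct` module; the helper names are hypothetical. Lane-by-lane addition of two packed doubles mirrors the idea behind SSE2's `ADDPD` instruction.

```python
import struct

# Toy model: a 128-bit XMM register represented as 16 raw bytes.


def pack_doubles(a: float, b: float) -> bytes:
    """Two 64-bit doubles fill one 128-bit register (packed double)."""
    return struct.pack("<2d", a, b)


def pack_floats(a: float, b: float, c: float, d: float) -> bytes:
    """Four 32-bit floats fill one 128-bit register (packed single)."""
    return struct.pack("<4f", a, b, c, d)


def add_packed_doubles(x: bytes, y: bytes) -> bytes:
    """Add lane by lane, the idea behind SSE2's ADDPD instruction."""
    xs = struct.unpack("<2d", x)
    ys = struct.unpack("<2d", y)
    return struct.pack("<2d", *(p + q for p, q in zip(xs, ys)))


if __name__ == "__main__":
    result = add_packed_doubles(pack_doubles(1.5, 2.0), pack_doubles(10.0, 20.0))
    print(len(result), struct.unpack("<2d", result))  # 16 (11.5, 22.0)
```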

Which Windows Operating Systems Already Support 64-bit Computing?

For the desktop, the Microsoft operating system that already supports 64-bit is Windows XP Professional x64 Edition. Microsoft also has several other operating systems that support 64-bit technology, but they are not aimed at desktop PCs. These include Windows Server 2003, Standard x64 Edition; Windows Server 2003, Enterprise x64 Edition; and Windows Server 2003, Datacenter x64 Edition. Some other versions of Microsoft's operating systems also support 64-bit technology, such as Windows XP 64-bit Edition 2003 (discontinued) and Windows Server 2003, Enterprise Edition with SP1 for Itanium-based systems. However, these run on Intel Itanium CPUs and are not compatible with 64-bit desktop processors such as AMD64 and EM64T.

For desktop users, the most likely choice is Microsoft Windows XP Professional x64 Edition, which became available in May 2005. If you want to know more, see http://www.microsoft.com/windowsxp/64bit/default.mspx.

What Is Windows XP Professional x64 Edition Like?

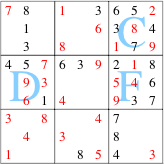

Windows XP Professional x64 Edition keeps the same user interface as Windows XP Professional. Its upgrades are aimed at supporting 64-bit desktop processors with the AMD64/EM64T architectures, above all in how the operating system handles memory; more details can be seen in the table. Although it is built on the Windows Server 2003 SP1 codebase rather than on Windows XP itself, it offers the same additional facilities as Windows XP Service Pack 2, such as support for wireless devices, Windows Firewall, Windows Security Center, a Bluetooth infrastructure, Power Management, and support for .NET Framework 1.1.

What Happens to 32-bit Applications?

Since many applications still run as 32-bit programs, this is an important question. 64-bit Windows can still run 32-bit applications, but its backward compatibility stops there: a 64-bit OS cannot run 16-bit or MS-DOS applications. Installers deserve attention too: if a setup.exe file is still a 16-bit installer, the installation will not run at all. What about games? Many popular games that are still 32-bit applications can still run. This is possible thanks to a subsystem that Windows XP x64 Edition calls Windows on Windows 64 (WOW64), an emulation layer that lets 32-bit applications run inside the 64-bit OS environment while keeping the two environments from interfering with each other. (It is a different mechanism from the Program Compatibility Wizard, or PCW, which only adjusts compatibility settings for individual programs.)
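A process can tell whether it is running under WOW64. Microsoft documents that a 32-bit process under WOW64 sees `PROCESSOR_ARCHITECTURE=x86` and an extra variable, `PROCESSOR_ARCHITEW6432`, holding the real 64-bit architecture. The sketch below relies only on those two variables; the `process_bitness` helper and its result strings are hypothetical, and it accepts an explicit mapping so it can be tried on any OS.

```python
import os
from typing import Mapping, Optional


def process_bitness(env: Optional[Mapping[str, str]] = None) -> str:
    """Classify a Windows process from its environment variables.

    Under WOW64, a 32-bit process sees PROCESSOR_ARCHITECTURE=x86 and
    PROCESSOR_ARCHITEW6432 set to the real 64-bit architecture.
    """
    env = os.environ if env is None else env
    arch = env.get("PROCESSOR_ARCHITECTURE", "").upper()
    wow64 = env.get("PROCESSOR_ARCHITEW6432", "").upper()
    if arch == "X86" and wow64:
        return "32-bit process under WOW64 on 64-bit Windows"
    if arch in ("AMD64", "IA64"):
        return "native 64-bit process"
    if arch == "X86":
        return "32-bit process on 32-bit Windows"
    return "unknown"


if __name__ == "__main__":
    print(process_bitness())
```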

This separation prevents conflicts between the two environments. One way it is achieved is by keeping each environment's DLLs (Dynamic Link Libraries) apart: 32-bit applications cannot load 64-bit DLLs, and vice versa.
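On disk, 64-bit Windows keeps 64-bit system DLLs in `%windir%\System32` and 32-bit ones in `%windir%\SysWOW64`; WOW64's file system redirector silently sends a 32-bit process's `System32` accesses to `SysWOW64`. The sketch below is a simplified model of that rule, assuming `C:\Windows` as the Windows directory; the `redirect_for_32bit` helper is hypothetical, and it ignores the subdirectories the real redirector exempts.

```python
import ntpath


def redirect_for_32bit(path: str, windir: str = r"C:\Windows") -> str:
    """Simplified WOW64 file system redirection for a 32-bit process.

    Ignores the exempted subdirectories the real redirector leaves alone.
    """
    system32 = ntpath.join(windir, "System32")
    normalized = ntpath.normcase(path)    # lowercase, backslashes
    prefix = ntpath.normcase(system32)
    if normalized == prefix or normalized.startswith(prefix + "\\"):
        # Keep everything after ...\System32 and re-root it in SysWOW64.
        return ntpath.join(windir, "SysWOW64") + path[len(system32):]
    return path


if __name__ == "__main__":
    print(redirect_for_32bit(r"C:\Windows\System32\kernel32.dll"))
```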

Is There a Performance Improvement between 32-bit and 64-bit?

If you expect a miraculous increase in PC performance from a 64-bit OS and 64-bit software, you will be disappointed. There is no significant performance difference between 64-bit and 32-bit, at least with the PCs and applications available today. So what are the benefits of 64-bit? 32-bit Windows can use at most 4 GB of RAM and 2 to 3 GB of address space per application (depending on the application). Compare that with the x64 Edition, which can handle up to 128 GB of RAM and 8 TB of address space for each application process.
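The address-space figures above are powers of two (using binary units, so 1 GB = 2^30 bytes and 1 TB = 2^40 bytes). Assuming the usual split in which x64 Windows gives each process an 8 TB user-mode half of a 16 TB virtual address space, the arithmetic works out as:

```latex
\begin{aligned}
2^{32}\ \text{bytes} &= 4\ \text{GB} && \text{(entire 32-bit address space)}\\
2^{31}\ \text{bytes} &= 2\ \text{GB} && \text{(default per-process space on 32-bit Windows)}\\
2^{43}\ \text{bytes} &= 8\ \text{TB} && \text{(per-process user-mode space on x64 Windows)}\\
2^{44}\ \text{bytes} &= 16\ \text{TB} && \text{(user + kernel virtual address space)}\\
2^{43} / 2^{31} &= 2^{12} = 4096 && \text{(x64 per-process space vs. the default 2 GB)}
\end{aligned}
```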

Larger physical memory and address space let 64-bit applications use memory more freely and reduce paging to the hard drive, which indirectly improves overall performance. As for raw speed, there is most likely no significant improvement, because AMD64 and EM64T processors run at the same clock speed whether they are executing 32-bit or 64-bit applications.

What About 64-bit Application Development?

Do we have to wait long for 64-bit applications? So far, the number of applications that run natively on a 64-bit OS is still limited. But the launch of Intel's Pentium D desktop processors, which come with EM64T instructions, will certainly speed this up, as will the availability of Windows XP Professional x64 Edition. By the end of 2005, we are expected to start seeing applications that run natively on 64-bit. With 64-bit desktop processors becoming increasingly widespread, and with the launch of Windows XP Professional x64 Edition, applications that support 64-bit computing have started to appear. The same is true for games. One game that has been optimized for 64-bit is "Shadow Ops: Red Mercury", which takes advantage of the memory optimizations and improved multimedia capabilities of the 64-bit operating system. The improvements are noticeable in many areas, from the detail of the displayed image (both textures and background detail) to improved AI (artificial intelligence).
